Intel is set to release its Gaudi 3 AI accelerator in the second quarter, with Dell Technologies, HPE, Lenovo, and Supermicro building systems around it. Gaudi 3, unveiled at the Intel Vision conference, is part of Intel's strategy to compete with Nvidia in AI training and inference workloads.

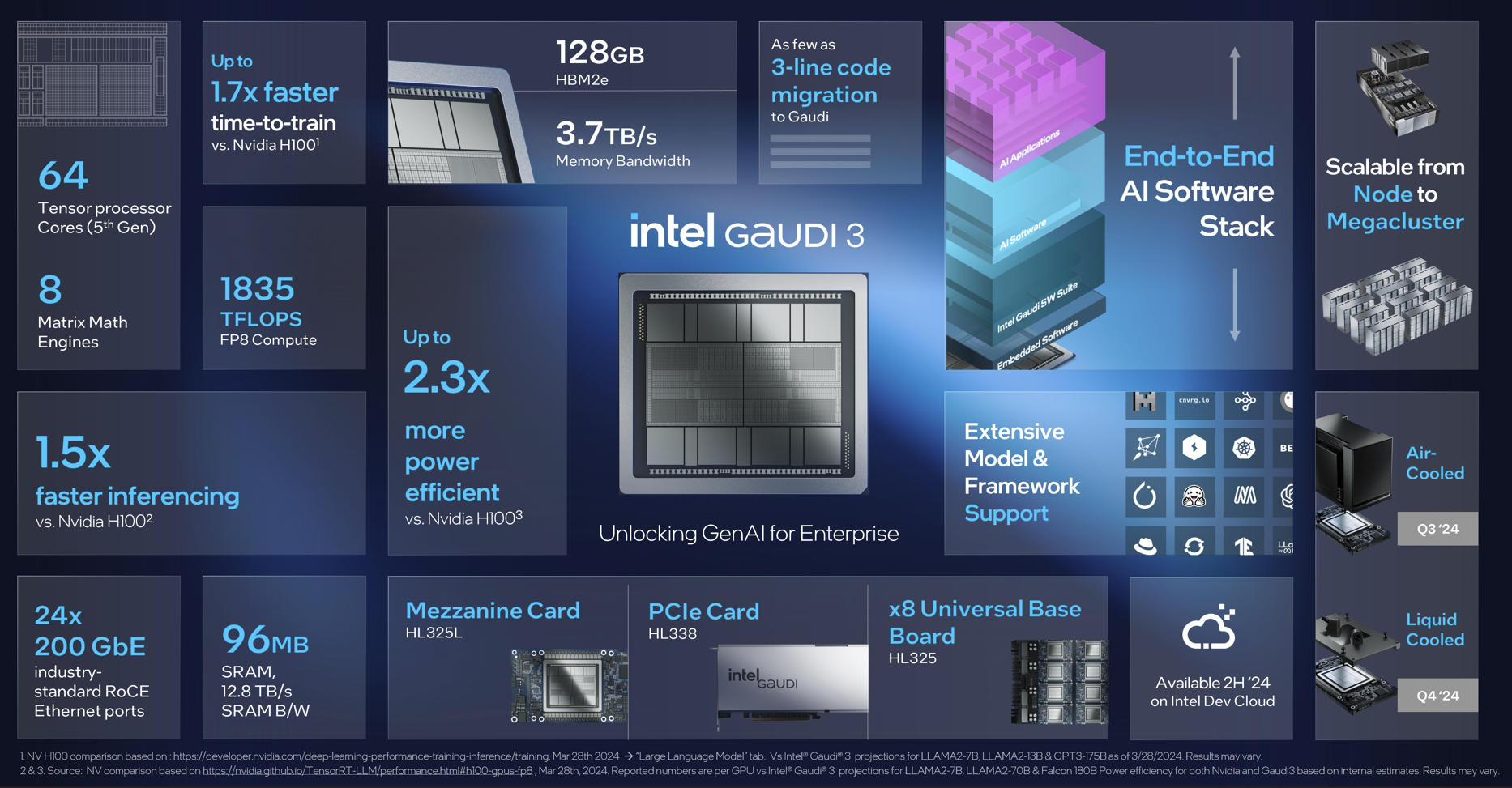

Intel claims Gaudi 3 delivers, on average, 50% better inference performance and 40% better power efficiency than Nvidia's H100, at a lower cost. Although Nvidia's newer Blackwell GPUs offer further performance gains, enterprises will still have a range of options for AI workloads, including offerings from AMD and in-house chips from AWS and Google Cloud.
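
The relative figures can be read as simple ratios. This is a sketch under our own assumptions, not Intel's stated methodology: we take "inference performance" to mean throughput $T$ (for example, tokens per second) and "power efficiency" to mean throughput per watt $T/P$, each compared on the same workload.

$$
\frac{T_{\text{Gaudi 3}}}{T_{\text{H100}}} \approx 1.5,
\qquad
\frac{(T/P)_{\text{Gaudi 3}}}{(T/P)_{\text{H100}}} \approx 1.4
\;\;\Longrightarrow\;\;
\frac{(P/T)_{\text{Gaudi 3}}}{(P/T)_{\text{H100}}} \approx \frac{1}{1.4} \approx 0.71
$$

Under these assumptions, since $P/T$ is energy per unit of work, a 40% efficiency gain means Gaudi 3 would finish the same inference job using roughly 71% of the energy an H100 needs.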

Key features of Intel's Gaudi 3 include:

- Manufacturing on a 5 nm process
- Parallel engines for deep learning compute
- A compute engine with 64 AI-custom tensor processor cores (TPCs) and eight matrix multiplication engines (MMEs)
- Increased memory (128 GB of HBM2e) for generative AI processing
- Twenty-four integrated 200 Gb Ethernet ports for faster networking
- PyTorch framework integration
- PCIe add-in card options

In addition to the Gaudi 3 launch, Intel is collaborating with companies such as SAP, Red Hat, and VMware to create an open platform for enterprise AI. Intel is also working with the Ultra Ethernet Consortium to develop AI network interface cards and AI connectivity chiplets for AI infrastructure. As demand for AI grows, Gaudi 3 marks a significant step forward for Intel in the AI hardware market.